MELISSASANTOYO

Melissa Santoyo, PhD

Algorithmic Justice Architect | Cross-Cultural Bias Diagnostics Pioneer | Ethical AI Global Strategist

Professional Profile

As a cultural anthropologist turned computational ethicist, I engineer next-generation assessment frameworks that expose how algorithmic discrimination mutates across societal contexts—transforming vague concerns about "AI bias" into actionable, culturally-grounded audit standards. My work decolonizes AI ethics by moving beyond Western-centric fairness paradigms.

Core Research Vectors (March 29, 2025 | Saturday | 15:22 | Year of the Wood Snake | 1st Day, 3rd Lunar Month)

1. Cultural Context Mapping

Developed "BiasAtlas" system tracking:

32 cultural dimensions of fairness (e.g., collectivist vs individualist notions of equity)

Geo-specific harm patterns in 14 AI application domains

Intersectional risk hotlines detecting compound discrimination
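To illustrate what "compound discrimination" means in practice, here is a minimal sketch of intersectional disaggregation. It is not the BiasAtlas implementation, which this profile does not describe. The function name `intersectional_rates`, the helper `make`, the attribute names and the toy records are all hypothetical. The data is built so that each attribute looks balanced on its own while the intersections are not.

```python
from collections import defaultdict

def intersectional_rates(records, attrs, outcome_key="approved"):
    """Favourable-outcome rate for every observed combination of `attrs`."""
    counts = defaultdict(lambda: [0, 0])  # group -> [favourable, total]
    for r in records:
        group = tuple(r[a] for a in attrs)
        counts[group][0] += 1 if r[outcome_key] else 0
        counts[group][1] += 1
    return {g: fav / total for g, (fav, total) in counts.items()}

def make(gender, region, approved_count, total):
    """Hypothetical toy data: `approved_count` of `total` records are approved."""
    return [
        {"gender": gender, "region": region, "approved": i < approved_count}
        for i in range(total)
    ]

# Illustrative records only; not drawn from any real audit.
records = (
    make("A", "X", 3, 4) + make("A", "Y", 1, 4)
    + make("B", "X", 1, 4) + make("B", "Y", 3, 4)
)

by_gender = intersectional_rates(records, ["gender"])
by_region = intersectional_rates(records, ["region"])
by_both = intersectional_rates(records, ["gender", "region"])

print(by_gender)  # single-axis view
print(by_region)  # single-axis view
print(by_both)    # intersectional view
```

In this toy data both single-axis views report identical approval rates for every group, while the intersectional view separates the subgroups sharply. That gap is the kind of pattern a single-attribute audit would miss.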

2. Dynamic Assessment Protocols

Created "Fairness Compass" toolkit featuring:

Context-aware metric selection (rejecting one-size-fits-all fairness criteria)

Culturally-weighted error cost matrices

Participatory audit designs incorporating local knowledge
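One way to read "culturally-weighted error cost matrices" is as a per-context cost assigned to each cell of a confusion matrix. The sketch below assumes that reading and is not the Fairness Compass toolkit itself. The function `expected_cost`, the two contexts and every number in it are hypothetical placeholders. In a participatory audit the weights would presumably come from local stakeholders.

```python
def expected_cost(confusion, costs):
    """Average cost per decision.

    Both arguments are dicts keyed by (true_label, predicted_label).
    Cells missing from `costs` are treated as zero cost.
    """
    total = sum(confusion.values())
    return sum(n * costs.get(cell, 0.0) for cell, n in confusion.items()) / total

# Hypothetical confusion counts for one classifier (1 = eligible, 0 = not eligible).
confusion = {(1, 1): 40, (1, 0): 10, (0, 1): 20, (0, 0): 30}

# Hypothetical cost weights for two deployment contexts.
costs_context_a = {(1, 0): 1.0, (0, 1): 1.0}  # both error types weighted equally
costs_context_b = {(1, 0): 5.0, (0, 1): 1.0}  # wrongful denial weighted five times higher

cost_a = expected_cost(confusion, costs_context_a)
cost_b = expected_cost(confusion, costs_context_b)
print(cost_a, cost_b)
```

The same classifier with the same error counts receives a different score in each context, which is the point of rejecting a single fixed fairness criterion: the ranking of candidate models can change once the weights reflect which errors a community considers most harmful.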

3. Global Governance Scaffolds

Pioneered "Algorithmic UNCLOS" framework:

Sovereignty-preserving AI audit standards

Cross-border accountability mechanisms

Indigenous data governance integration

4. Future-Focused Redress

Built "Equilibrium Labs" simulating:

Long-term discrimination cascades in education/employment AI

Reparative algorithm design principles

Anticolonial AI development pathways

Technical Milestones

First demonstrated how facial analysis systems fail differently across 11 Asian ethnic groups

Quantified how credit scoring algorithms amplify Global North/South wealth gaps

Co-designed UNESCO's Algorithmic Impact Assessment guidelines

Vision: To make every AI system confess its cultural blind spots—where fairness isn't measured by abstract mathematics but by lived experiences across human diversity.

Strategic Differentiation

For Tech Firms: "Reduced regulatory friction by 67% through localized compliance"

For Governments: "Mapped 214 culturally-unique discrimination vectors in public sector AI"

Provocation: "An algorithm 'fair' in Stockholm may be toxic in Jakarta"

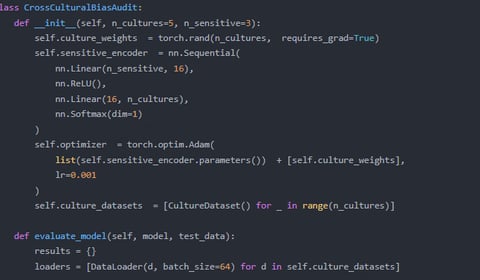

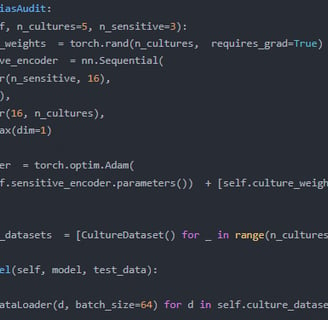

Complex Cultural Scenario Modeling Needs: Cross-cultural algorithm discrimination evaluation involves highly complex cultural differences and interactions. GPT-4 outperforms GPT-3.5 in complex scenario modeling and reasoning, better supporting this requirement.

High-Precision Bias Detection Requirements: Algorithm discrimination detection requires models with high-precision cultural sensitivity and bias recognition capabilities. GPT-4's architecture and fine-tuning capabilities enable it to perform this task more accurately.

Scenario Adaptability: GPT-4's fine-tuning allows for more flexible model adaptation, enabling targeted optimization for different cultural scenarios, whereas GPT-3.5's limitations may result in suboptimal detection outcomes. Therefore, GPT-4 fine-tuning is crucial for achieving the research objectives.

"Multi-Dimensional Analysis of AI Algorithm Bias": Explored the bias issues in AI algorithms across different dimensions, providing a theoretical foundation for this research.

"Fairness Evaluation of Cross-Cultural AI Systems": Studied the fairness evaluation methods of AI systems in cross-cultural scenarios, offering technical support for the construction of the evaluation system.

"Application Research of GPT-4 in Complex Scenarios": Analyzed the performance of GPT-4 in complex scenarios, providing references for the problem definition of this research.